Facial Motion Capture: Integration Into the 3D Animation Pipeline

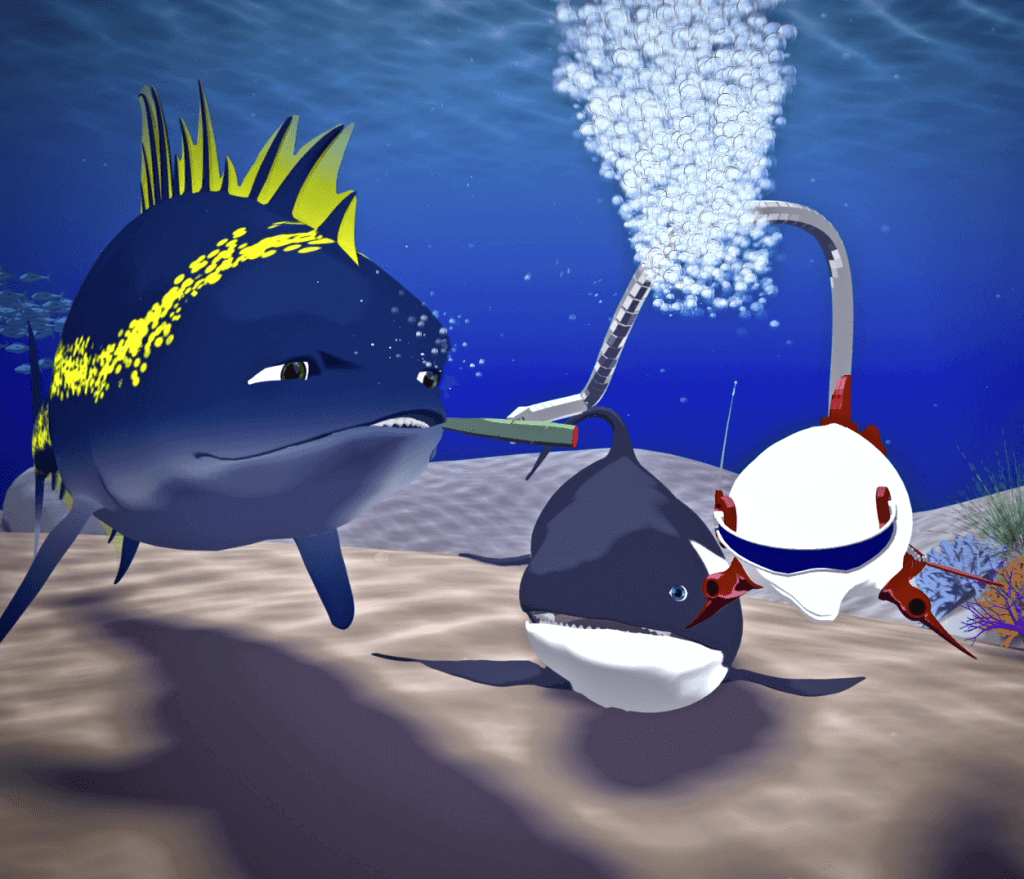

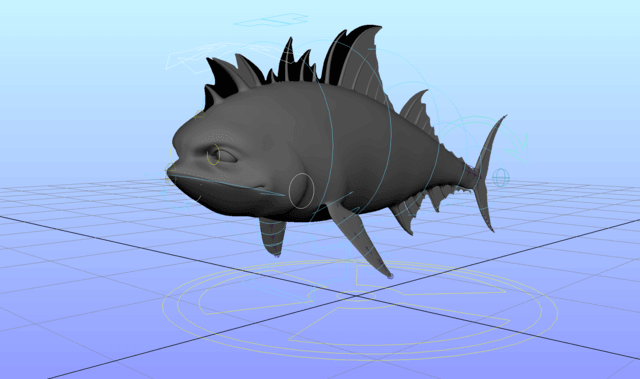

With so many new types of motion capture popping up, I thought I would take a moment to talk about ECG’s workflow with FaceShift on our upcoming pilot episode of Tsunami Tuna. FaceShift is blend shape motion capture software, and working with it differs quite a bit from a traditional marker-based motion capture workflow.

What is “Motion Capture”?

Motion capture, or mo-cap, is a method of translating movement from a live-action subject, such as an actor, to a computer-generated target, such as a cartoon character. Traditionally, this was done with multiple cameras arranged in a large circle, all pointed at markers placed on the subject. As the markers changed position relative to one another, the cameras recorded that movement, and the resulting data was used to control the target character.

Similarly, markers can be placed on an actor’s face to record the distances between specific points. Newer technology has made possible a type of motion capture that does not require markers at all, known (not surprisingly) as “markerless” mo-cap. FaceShift is an example of a software solution that uses blend shapes to perform this type of motion capture.

What is a “blend shape”?

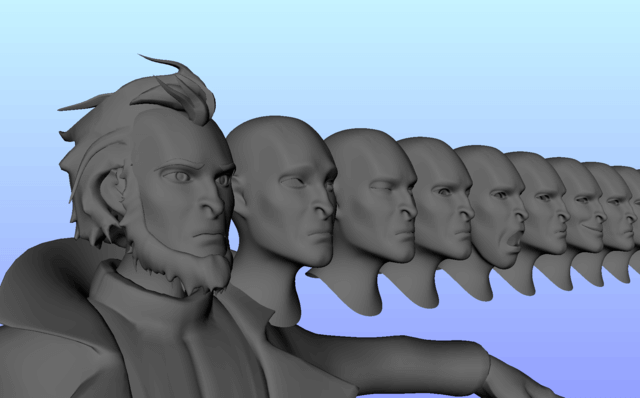

A blend shape, or morph target, is a copy of a 3D object or character that has been modified in some way. This slightly different copy can then be used to influence the original. For example, characters are typically given a “blink” blend shape so that they can blink. The original character model has a fully open eye, whereas the “blink” blend shape has a fully closed eye. We can then animate the degree to which the original morphs to match the blend shape. If the influence is set to 0.5, the eye will be half closed, since it sits halfway between the original and the fully closed “blink”.
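A common way to implement this “halfway” behavior is linear interpolation: each vertex of the original mesh moves toward the matching vertex of the blend shape by the influence value. That approach is assumed here rather than taken from FaceShift or any particular 3D package, and the `apply_blend_shape` helper and the eyelid coordinates below are hypothetical. The sketch is meant only to show the arithmetic.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]


def apply_blend_shape(base: List[Vertex], target: List[Vertex], weight: float) -> List[Vertex]:
    """Hypothetical helper: linearly move each base vertex toward its target by `weight`.

    weight = 0.0 leaves the base mesh unchanged; weight = 1.0 reproduces the target.
    """
    if len(base) != len(target):
        raise ValueError("base and target must have the same vertex count")
    result = []
    for (bx, by, bz), (tx, ty, tz) in zip(base, target):
        # base + weight * (target - base), applied per axis
        result.append((bx + weight * (tx - bx),
                       by + weight * (ty - by),
                       bz + weight * (tz - bz)))
    return result


# Made-up upper-eyelid vertices: open in the original mesh, closed in the "blink" shape.
eye_open = [(0.0, 1.0, 0.0), (0.5, 1.2, 0.1)]
blink = [(0.0, 0.0, 0.0), (0.5, 0.1, 0.1)]

half_blink = apply_blend_shape(eye_open, blink, 0.5)  # eyelid halfway closed
print(half_blink)
```

Animating the blink then amounts to animating that single `weight` value over time, which is why one number per blend shape per frame is enough to describe the eye.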

How are blend shapes used in mo-cap?

One of the most powerful features of blend shapes is that they can be mixed with nearly any number of other blend shapes to create complex expressions. For example, you might mix right eyebrow up, left eyebrow down, pursed lips, and squinted eyes to get a confused or puzzled expression.

Blend shape mo-cap software interprets an actor’s movements in terms of that actor’s preset expressions. Before the actor ever performs, he or she goes through a series of facial extremes so that FaceShift can learn to read the performance. Those extreme positions (or facial poses) are stored with names like “left eye closed” and are later used to describe the actor’s performance. So, if the actor goes from wide-eyed to squinting, the “left eye closed” value might go from 0 (completely open) to 0.7 (mostly closed). This could happen at the same time as, say, the mouth opening or the eyebrows coming down. Once the actor’s expression profile is made, he or she can perform a scene and have that performance translated into blend shape data. If we plug that data into the corresponding blend shapes of, say, a dolphin, we can watch the dolphin perform the same scene, mimicking the actor’s movements and expressions.
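To make the “plug that data into the dolphin” step concrete, here is a minimal sketch under a few stated assumptions. It assumes each captured frame is a dictionary of named weights, that blend shapes combine by adding each shape’s weighted offset from the base mesh (the common linear model), and that the artist supplies a name-to-name mapping from the actor’s channels to the character’s shapes. The channel names (`left_eye_closed`, `jaw_open`, `brow_down`), the dolphin shape names, and the two-vertex dolphin are all hypothetical; they are not FaceShift’s actual output format.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def combine_blend_shapes(
    base: List[Vertex],
    targets: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Add each target's weighted offset from the base mesh (linear blend shapes)."""
    result = [list(v) for v in base]
    for name, weight in weights.items():
        target = targets.get(name)
        if target is None:
            continue  # no matching shape on this character: ignore the channel
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                result[i][axis] += weight * (t[axis] - b[axis])
    return [tuple(v) for v in result]


def retarget(captured: Dict[str, float], channel_map: Dict[str, str]) -> Dict[str, float]:
    """Rename one frame of captured weights to the target character's shape names.

    Channels without an entry in `channel_map` are dropped.
    """
    return {channel_map[ch]: w for ch, w in captured.items() if ch in channel_map}


# Hypothetical channel names for one captured frame (not FaceShift's real names).
frame = {"left_eye_closed": 0.7, "jaw_open": 0.4, "brow_down": 0.2}
channel_map = {"left_eye_closed": "dolphin_blink_L", "jaw_open": "dolphin_beak_open"}

# Hypothetical two-vertex dolphin: vertex 0 on the left eyelid, vertex 1 on the beak.
dolphin_base = [(0.0, 1.0, 0.0), (2.0, 0.0, 0.0)]
dolphin_shapes = {
    "dolphin_blink_L": [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)],
    "dolphin_beak_open": [(0.0, 1.0, 0.0), (2.0, -1.0, 0.0)],
}

dolphin_weights = retarget(frame, channel_map)
posed = combine_blend_shapes(dolphin_base, dolphin_shapes, dolphin_weights)
```

The design point this illustrates is that only the weights travel from actor to character; what “left eye closed” actually looks like is decided entirely by the sculpted dolphin shape. Here the eyelid vertex ends up 70% of the way closed and the beak 40% open, while the unmapped `brow_down` channel has no effect.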

Why not just use a traditional marker mo-cap?

Marker motion capture is a very useful tool and can be used for many important tasks. One of its main drawbacks, however, is that it relies on the distances and proportions of the actor’s face to drive the target face. This is not usually an issue when the actor is driving a humanoid character, but it quickly becomes impractical for a character with a different face type, such as an alien, fish, bird, or dolphin. This is where blend shapes are particularly useful, because the artist has direct control over what a given expression looks like on the target character.

Performance Driven Animation

One of the huge advantages of facial motion capture (aside from the fact that your expression and lip-sync work has been done for you) is that you have reference footage of the actor to drive your animation performance. This by no means limits you to the actor’s actions, but it is a great place to find inspiration and to pick out small details that you might not otherwise have thought to animate. If you are going to use mo-cap, you should, of course, import the mo-cap data onto the target character(s) before you start animating.

I hope you enjoyed this post! Keep an eye out for our Tsunami Tuna pilot episode, coming later this year, as well as more animation-related blog content from yours truly. If you have any questions/requests/insults/death threats, drop me a line in the comments below or at chad@ecgprod.com.

Cheers!